“Alexa, change audio output to my Bluetooth speaker.” You probably know what the next response would be: “Sorry, I didn’t get that…” Well, that era might just be over, as major players like Amazon, Google, and Apple are now racing to integrate AI models into their digital assistants. So, what does this mean for your Echo Dot and your wallet?

The AI Push

So yeah, the tech giants are embedding powerful AI models into their platforms. Here’s how they’re doing it:

- Amazon is integrating Claude from Anthropic into Alexa, aiming to make Alexa smarter and more conversational.

- Google is adding Gemini, their proprietary AI model, to Google Assistant, promising a more nuanced, context-aware experience.

- Apple is bringing ChatGPT-based technology to Siri, which could make Siri more responsive and versatile. This is my favourite one, not because it’s Apple, but because it’s GPT, duh.

Now, these integrations promise to make digital assistants more than just voice-command tools. They’re being positioned as virtual AIs that can understand context, recall previous interactions, and provide more in-depth responses, and that’s something we actually want. Well, something I want. However, there’s a big drawback: these AI models demand significantly more processing power than the legacy assistants we’ve been using for years.

Why Old Devices Can’t Keep Up

Most of us are familiar with Amazon’s Echo Dot, Google’s Nest Mini, and Apple’s HomePod mini. They’re compact, relatively affordable devices designed to do simple tasks. These legacy devices were never intended to handle the heavy lifting of AI-driven language models. The hardware inside a $50 Echo Dot, for example, simply doesn’t have the processing capability to run a model like Claude, Gemini, or ChatGPT natively.

To bring these AI models to existing devices (which, in my opinion, is next to impossible), companies face two main options:

- Release New Hardware with Enhanced Processing Power: Well, this isn’t actually bringing the LLMs to existing devices, it’s making newer versions of those devices, but you get where I’m going with this, right? Building new versions with more powerful processors would allow the AI models to run locally. However, this would drive prices up significantly. So, while the Echo Pop has always been a budget-friendly way to add Alexa to your home, a new Echo Pop with AI built in would be a different beast altogether, likely costing much more due to the added processing power it would need.

- Offer Cloud-Based AI Services with a Subscription: Alternatively, these companies could keep the hardware simple and run the AI models in the cloud, letting even low-power devices tap into advanced AI capabilities without needing much processing power on the device itself. That would mean you’d just get an update on your Echo Pop. Great bargain, right? But at what cost? This route raises significant concerns:

- Privacy and Security Risks: Cloud-based solutions require data to be transmitted and processed externally, raising potential privacy issues. Many users are uneasy about sending potentially sensitive conversations over the internet to be processed by third-party servers. People are already concerned about the models running on their “AI” phones, which has led manufacturers to limit most of these fancy AI features to their highest-performing models, where they can run locally and ease those concerns. With these digital assistant devices, it’s a whole different story.

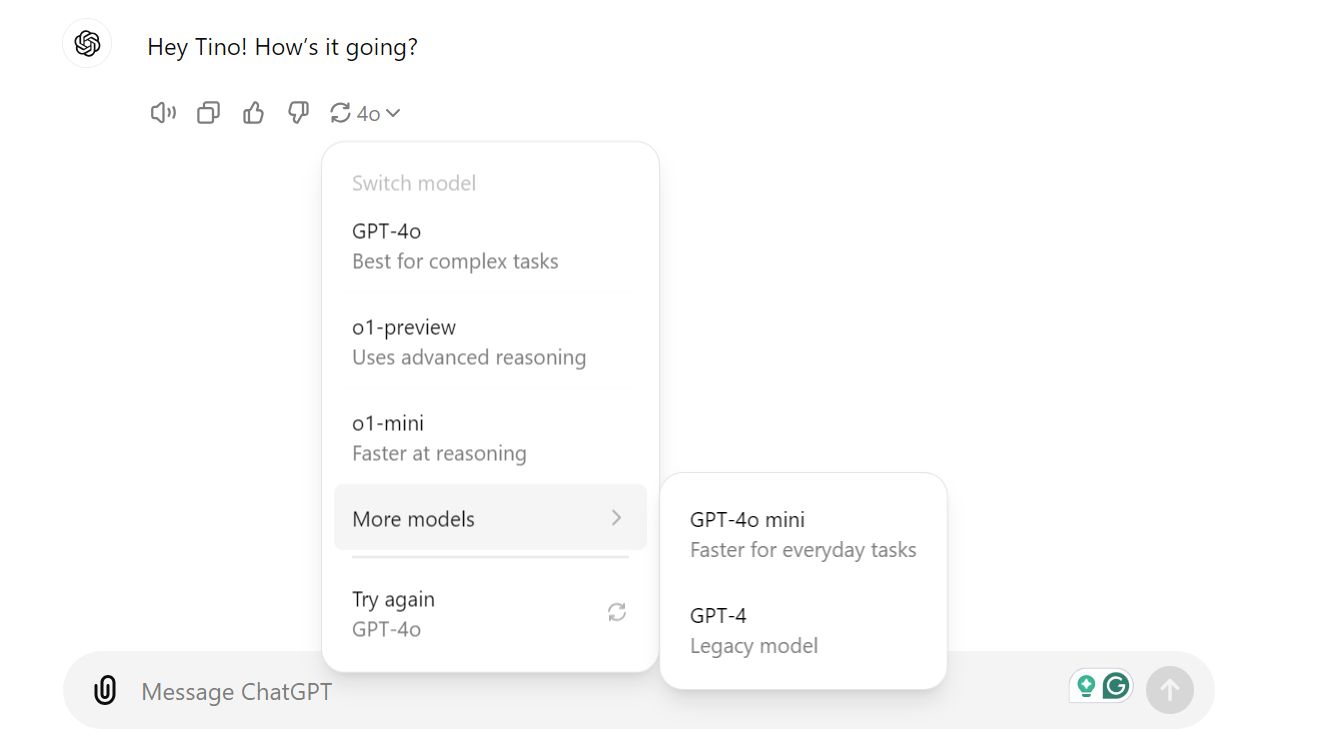

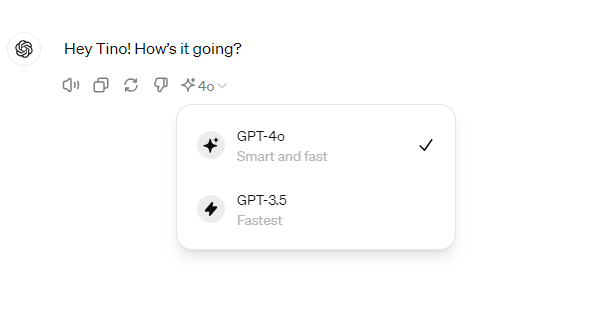

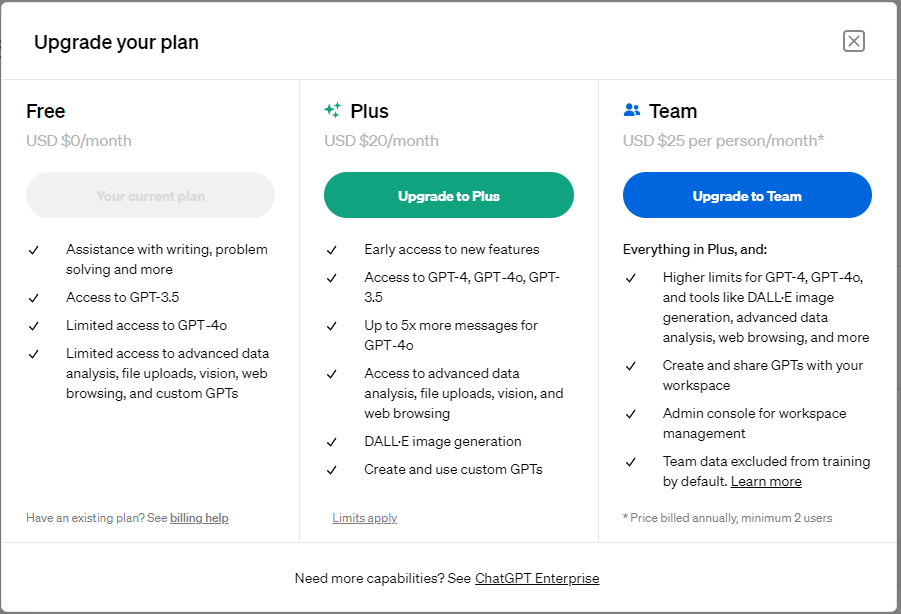

- Subscription Costs: To cover the cost of running powerful AI models in the cloud, companies are likely to introduce subscription plans. This would add yet another monthly fee for users who may already be feeling subscription fatigue, especially as so many services now rely on recurring fees.
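To put the two options side by side, here’s a rough back-of-the-envelope sketch of when a subscription ends up costing more than pricier hardware. Nobody has announced real prices, so the function name and the numbers in the example (`hardware_premium` of 100 and `monthly_fee` of 5) are purely hypothetical placeholders. Swap in your own guesses.

```python
import math


def months_until_subscription_costs_more(hardware_premium: float,
                                         monthly_fee: float) -> int:
    """Return the first month in which total subscription fees exceed
    the one-time extra cost of an AI-capable device.

    hardware_premium: hypothetical extra upfront cost of the new device
                      compared with the cheap legacy one.
    monthly_fee:      hypothetical monthly price of the cloud AI plan.
    """
    if monthly_fee <= 0:
        raise ValueError("monthly_fee must be positive")
    # After n months the subscription has cost n * monthly_fee.
    # We want the smallest whole n where that total is strictly greater
    # than the hardware premium.
    return math.floor(hardware_premium / monthly_fee) + 1


# Hypothetical example: the device costs 100 more upfront,
# while the cloud plan costs 5 per month.
print(months_until_subscription_costs_more(100, 5))
```

With those made-up numbers, the subscription overtakes the hardware premium in month 21, so anyone who keeps a smart speaker for more than a couple of years might come out ahead with the pricier device. That only compares cost, of course; the privacy side of the trade-off doesn’t fit in a formula.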

Why Legacy Assistants Are Falling Behind

One of the more subtle effects of this AI hardware dilemma is the growing gap between legacy digital assistants and the next-gen, super-smart, LLM-powered ones. People accustomed to Alexa’s simple skills or Google Assistant’s straightforward commands might quickly feel underwhelmed by the limitations of the older assistants as the new ones become capable of nuanced, context-aware interactions that feel more personal. You know, I’d never want to go back to the legacy assistant once I’m able to have a full-on convo with my assistant about how my DMs are dry across all my socials. That’s just a whole different experience.

Despite all the promise, the AI models aren’t quite there yet. From my own experience, Gemini, Google’s AI model, has yet to fully match the practical, everyday usability of Google Assistant. It’s still in its early stages, so while it can chat about a broad range of topics, it sometimes struggles with tasks that Assistant handles smoothly. It can’t even skip to the next song if my phone’s screen is switched off. In other words, the switch to a fully AI-driven assistant isn’t seamless, which might encourage users to hang onto their legacy assistants for now, even if they’re not as fancy. I’m the *users*, by the way.

Why the Price and Privacy Trade-Off Could Slow Adoption

With these new fancy AI-powered models, there’s likely to be a split in the market:

- Budget-conscious users may stick with legacy devices or forgo digital assistants altogether if prices rise significantly.

- Privacy-minded users might avoid cloud-based AI options due to security concerns, even if that means missing out on advanced capabilities.

- Tech enthusiasts willing to pay for the latest and greatest will have options to buy more powerful (and expensive) devices, or they’ll sign up for subscriptions to access cloud-based services. We’ve seen people buying the Vision Pro, so it’s nothing new when it comes to enthusiasts.

This split could lead to a somewhat divided ecosystem, where advanced, AI-capable assistants coexist with simpler, budget-friendly models. There’s nothing wrong with that; it’s exactly what the smartphone space is like and has been like since, well, the beginning. But unlike with smartphones, it could be a tricky balancing act for the tech companies behind these assistants. Pricing the new, advanced models too high could result in slower adoption, while heavy reliance on subscription models could alienate users who are already juggling multiple monthly fees.

Conclusion

So as the top tech guys push forward with integrating advanced AI into their digital assistants, we as users face a complicated choice: stick with legacy models that are cheaper but limited in functionality, or pay more, either upfront for new hardware or through monthly subscriptions, to access the latest AI-powered versions. By the way, this is just my speculation about what the market might look like in the coming months or years, not how it’s supposed to be.

Want more tech insights? Don’t miss out—subscribe to the Tino Talks Tech newsletter!